Jenkins is the leading open source automation server, providing hundreds of plugins to support building, deploying and automating any project. In this article, we take a closer look at how we significantly reduced testing times in our Jenkins setup.

emnify runs a custom-built mobile core network as software on Amazon Web Services. Since we have the complete software stack under our control, we implemented unique features that give our customers the power to configure mobile networks according to their needs, both through the Web UI and directly via the REST API.

Our platform provides connectivity for our customers' IoT devices distributed around the globe and used for a multitude of exciting use cases. Therefore, writing automated tests to ensure correct functionality is our daily bread and butter. The granularity of these tests allows us to deliver frequent updates without the need for routine manual testing. However, over the course of the last three years, since emnify was founded, our team created so many complex automated tests that the overall execution time grew to an unacceptable duration of more than 2 hours.

As we were no longer willing to wait that long for feedback after filing a pull request, we invested some time in improving our Jenkins test setup with an ambitious goal: running all tests within 30 minutes.

Problem Statement

The emnify core application is based on Akka, a JVM-based implementation of the Actor Model. This application runs on multiple Akka nodes with different roles, implementing the functionalities that we need in order to run our mobile core network, like the Home Location Register (HLR) or gateways forwarding all user traffic.

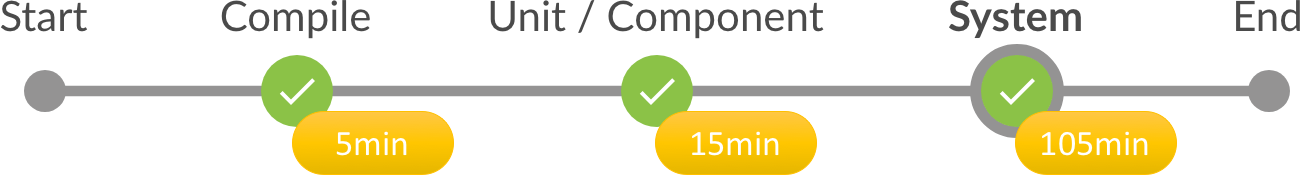

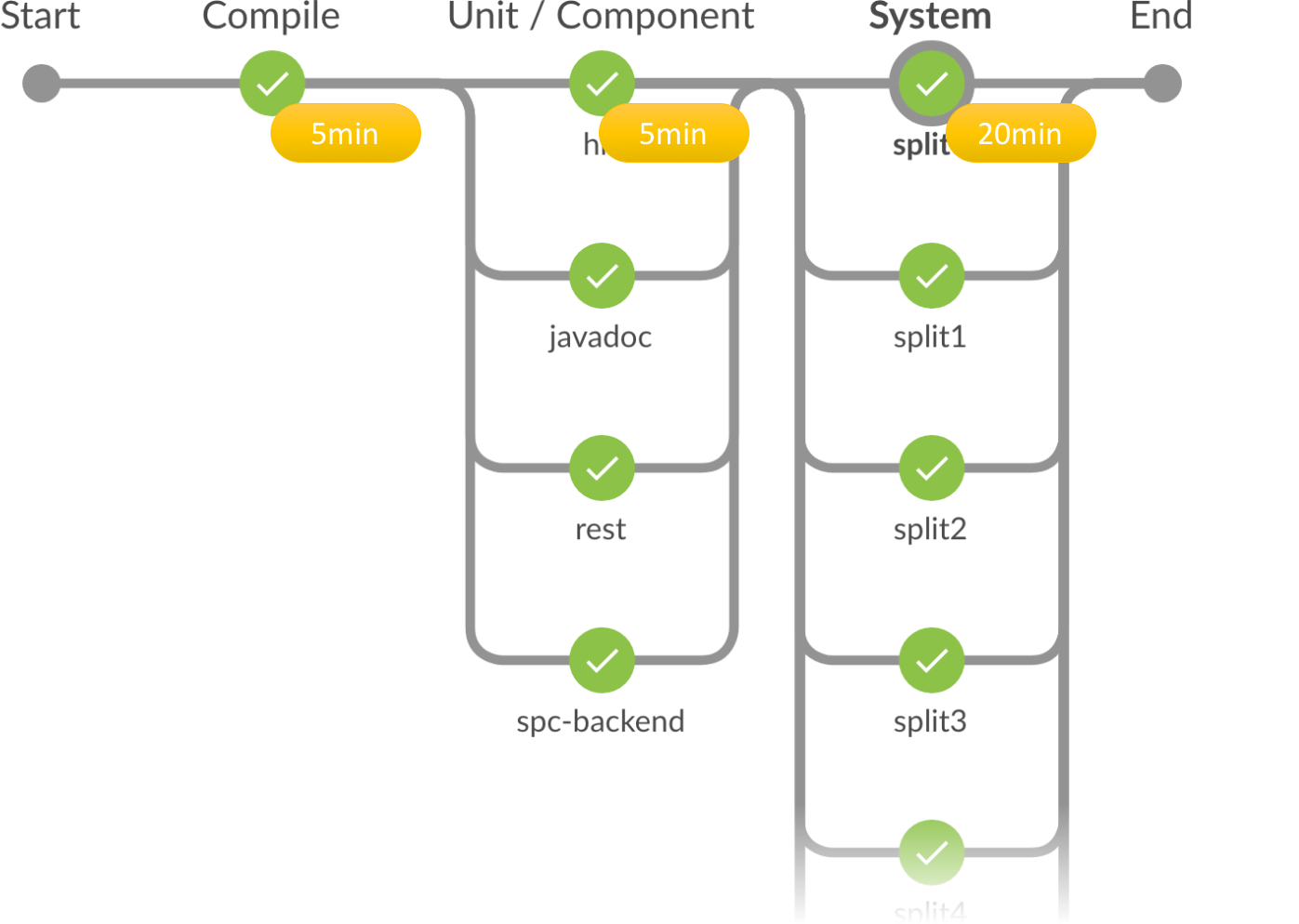

The pipeline executing the test suite in Jenkins looks as follows - mind the annotated runtime:

- 1400+ unit and integration tests targeting only a single node with a runtime of ~15min

- 200+ end-to-end system tests with a runtime of ~105min, the longest of them taking 5-10min

The main reason for this enormous runtime of the system tests is that the different Akka nodes implementing various entities of the mobile core network are spun up dozens of times with different configurations. In addition to the end-to-end tests for our REST API, the usage of an emulator allows us to confirm the correct communication with other mobile network operators using protocols like MAP and GTP.

As these tests provide enormous value to our development team, we cannot simply remove any of them. The easiest option to reduce the time until we get feedback from Jenkins is to run these tests in parallel on multiple build agents.

Updates to the Jenkins Setup

While overhauling our Jenkins setup, we recently introduced the following changes:

- Our Jenkins master runs as a Docker container in our existing AWS Elastic Container Service (ECS) cluster

- Build agents are launched by the Jenkins master on demand using the Amazon EC2 plugin

- Jenkins Pipelines allow us to define the build pipeline as code and version it together with the application. Also, new branches automatically appear as separate jobs in Jenkins. As enough has been said about Jenkins Pipelines already (including by ourselves), we assume in the following that the reader is familiar with them.

Parallelizing Unit/Component Tests

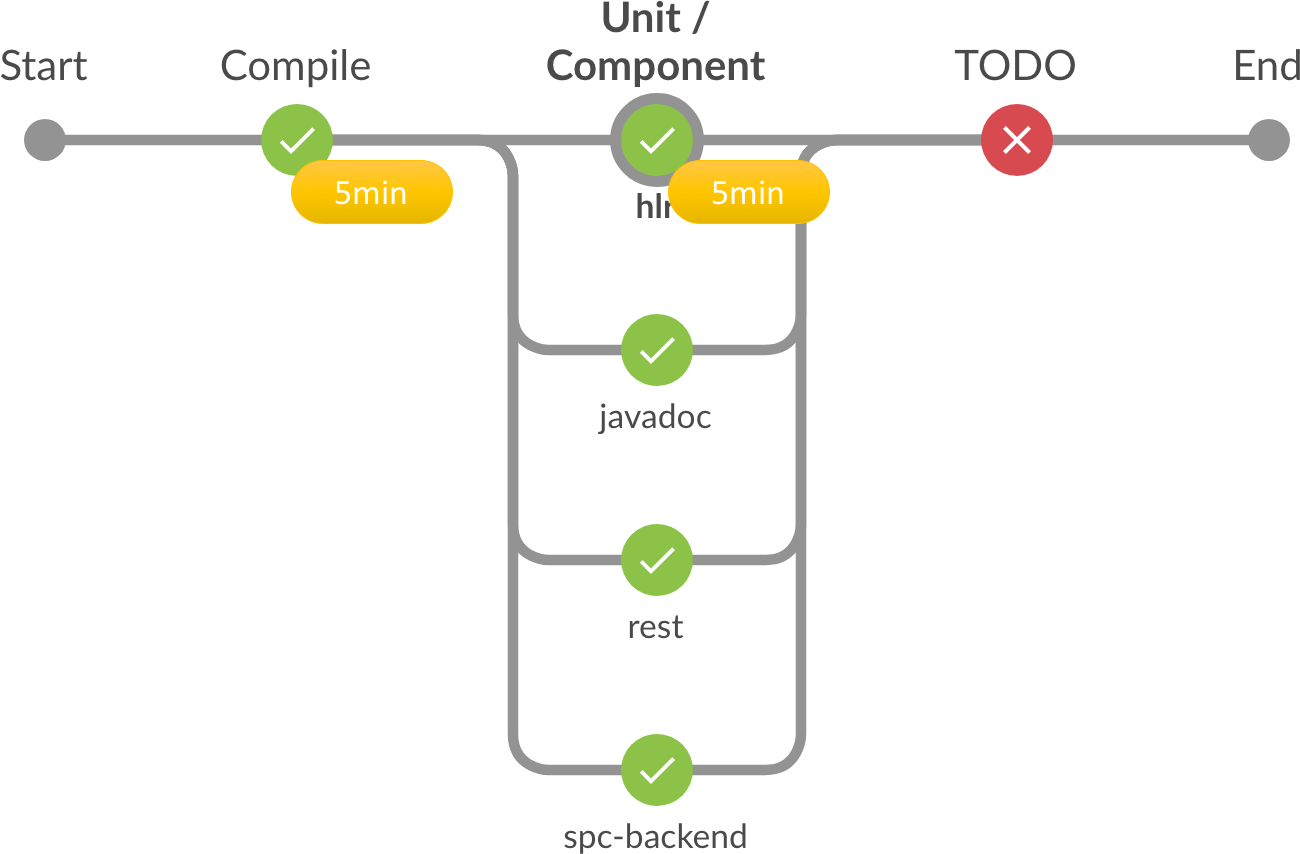

To parallelize this first part of the tests, we took the simple approach of using Jenkins Pipeline's parallel step with manually defined branches:

- hlr: runs the tests for our HLR

- spc-backend: runs the tests for our backend nodes

- rest: runs unit/component tests for the remaining Maven projects

- javadoc: generates JavaDoc

The latter two parallel branches both run on the build agent that checked out the Git repository, as JavaDoc generation requires the source code. Since JavaDoc generation finishes within a minute, other tests can run in parallel on the same agent. The build artifacts and dependencies stored in the local Maven repository (usually ~/.m2/) are configured to be located within the workspace, so that they can be transferred from the agent executing the build to the others using the stash / unstash steps. The resulting (simplified) pipeline code looks as follows:

    // allocate a build agent
    node {
        stage('Compile') {
            checkout scm
            mvn "--threads 1C clean install -DskipTests"
        }

        // copy workspace data to the master so that other slaves can pick it up
        echo "Stashing pom"
        stash name: 'pom', includes: "**/pom.xml"
        echo "Stashing target"
        stash name: 'target', includes: '*/target/**', excludes: '*/target/*.tar.gz'
        echo "Stashing sql"
        stash name: 'sql', includes: '*/src/**/*.sql'
        echo "Stashing local maven repo"
        stash name: 'repo', includes: '.repository/**'

        stage('Unit / Component') {
            parallel(
                "rest": {
                    mvn "verify --projects !spc-backend,!hlr,!esc-test"
                },
                "javadoc": {
                    // javadoc needs to be built on the agent running the build, as we don't have sources later
                    mvn "javadoc:javadoc"
                },
                "spc-backend": {
                    // allocate additional build agent
                    node {
                        // get the workspace contents back from the master
                        unstash 'pom'; unstash 'sql'; unstash 'target'; unstash 'repo'
                        mvn "verify --projects spc-backend"
                    }
                },
                "hlr": {
                    // allocate additional build agent
                    node {
                        // get the workspace contents back from the master
                        unstash 'pom'; unstash 'sql'; unstash 'target'; unstash 'repo'
                        mvn "verify --projects hlr"
                    }
                }
            )
        }

        stage('TODO') { error "Next section..." }
    }

    def mvn(params) {
        withMaven([mavenLocalRepo: '.repository']) {
            sh "mvn ${params}"
        }
    }

The resulting pipeline executes within roughly 5 minutes per stage.

Parallelizing System Tests using the Parallel Test Executor Plugin

Moving on to the next stage, we needed to parallelize the end-to-end tests declared in our esc-test project. Given the 30-minute goal, we have roughly 20 minutes left to execute tests worth over 100 minutes of runtime. In order to limit the Maven Failsafe Plugin to a subset of all tests, one has to create inclusion/exclusion lists so that each of the build agents runs mvn verify only for its specified subset. Such lists can be generated based on the JUnit test results in two ways:

- Manually split up all tests and commit these lists next to the Jenkinsfile into our repository. These lists would have to be updated once in a while.

- Use the Parallel Test Executor plugin to do it automatically.

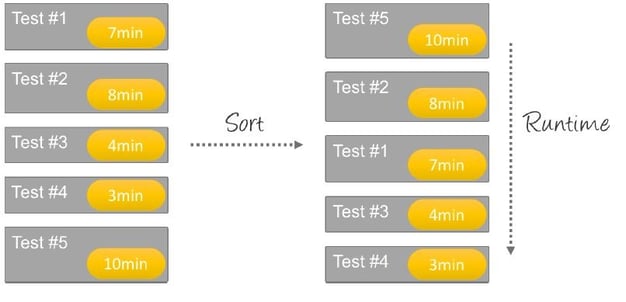

Of course, we chose the latter option, as the Parallel Test Executor plugin provides great value despite its lack of wide adoption (only ~1000 installs). A blog article on the Jenkins blog gives a nice introduction. During the build, the plugin scans through previous builds and reads the JUnit test results from Jenkins. Based on the individual test durations it finds there, it generates lists specifying the tests for each split. These are later passed to the respective Maven commands on the n build agents. The greedy, knapsack-style assignment it implements is illustrated in the following (assuming n=3):

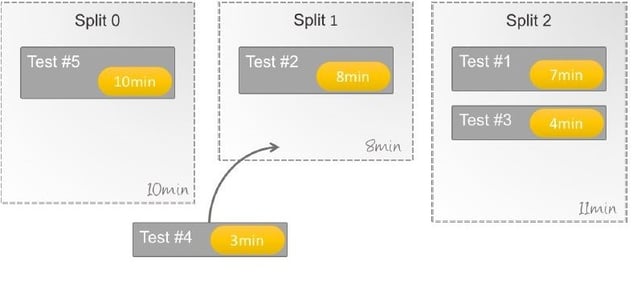

- Retrieve the JUnit test results from one of the previous builds and order the list of tests descending by execution time:

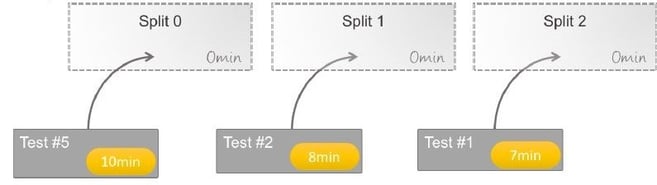

- Set up n=3 buckets and assign tests in established sort order:

- Continue assigning tests one-by-one to the bucket containing the lowest runtime:

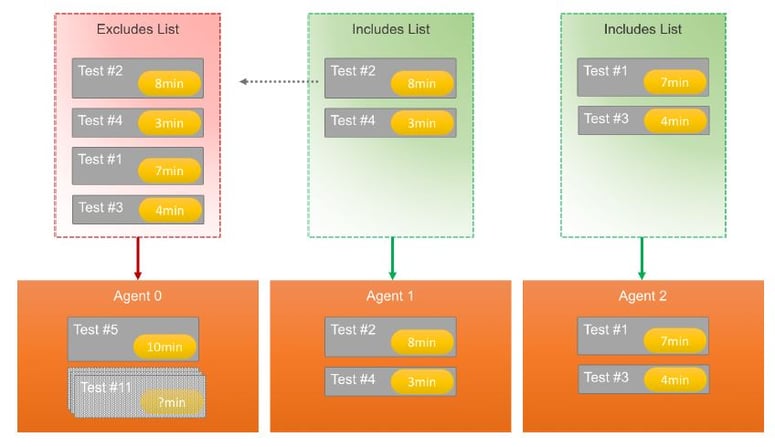

- Once all tests are split up among the n buckets, the lists are written to disk. Later, each of the build agents running in parallel picks up one of these lists. However, there is one thing to mention: n-1 agents get an includesList supplied, while one agent gets an excludesList containing exactly the tests listed in the n-1 includes lists. Tests assigned to that remaining bucket, such as Test #5, therefore appear in none of the lists. This ensures that tests added in the current build, like Test #11, are executed exactly once. Generating only include lists would effectively ignore all new test cases.
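The bucket assignment described in the steps above can be sketched in a few lines of plain Groovy. This is a minimal illustration, not the plugin's actual code: the function names splitGreedy and toSplits, the test names and the durations are all made up for this example.

```groovy
// Hypothetical test durations in seconds (the plugin reads real ones from JUnit results).
def durations = [t1: 10, t2: 8, t3: 7, t4: 5, t5: 4, t6: 2]

// Greedy assignment: longest test first, always into the bucket with the lowest total runtime.
def splitGreedy(Map durations, int n) {
    def buckets = (0..<n).collect { [tests: [], total: 0] }
    durations.sort { -it.value }.each { name, secs ->
        def target = buckets.min { it.total }
        target.tests << name
        target.total += secs
    }
    return buckets
}

// Bucket 0 is turned into an excludes list (everything the other buckets run);
// buckets 1..n-1 are turned into includes lists.
def toSplits(List buckets) {
    def others = buckets.tail()
    def splits = [[includes: false, list: others.collectMany { it.tests }]]
    others.each { splits << [includes: true, list: it.tests] }
    return splits
}

def buckets = splitGreedy(durations, 3)
def splits = toSplits(buckets)
buckets.each { println "${it.total}s: ${it.tests}" }
splits.each { println "${it.includes ? 'includes' : 'excludes'}: ${it.list}" }
```

With these made-up durations, all three buckets end up with 12 seconds of tests. A test added later, say t7, appears in none of the lists and is therefore picked up only by the agent holding the excludes list.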

The code in our pipeline matches the example given in the aforementioned blog post, except that it uses -Dfailsafe.includesFile instead of -Dsurefire.includesFile, as our system tests are run by the Maven Failsafe Plugin.

In the resulting pipeline, split0 gets the excludesList supplied, while all other build agents execute tests based on an includesList. By specifying 10 buckets/splits for the splitTests step, we can execute all our tests within 20 minutes:

    // how many parallel paths
    def parallelism = [$class: 'CountDrivenParallelism', size: 10]

    // partition the list of tests
    def splits = splitTests parallelism: parallelism, generateInclusions: true

    // branch name -> closure, filled in the loop below
    def branches = [:]

    // nodeLabel, populateDatabase() and splitFilePath() are helpers defined elsewhere in our Jenkinsfile
    for (int i = 0; i < splits.size(); i++) {
        /* Loop over each record in splits to prepare the branches that we'll run in parallel. */
        /* Split records returned from splitTests contain { includes: boolean, list: List }. */
        /* includes = whether list specifies tests to include (true) or tests to exclude (false). */
        /* list = list of tests for inclusion or exclusion. */
        /* The list of inclusions is constructed based on results gathered from */
        /* the previous successfully completed job. One additional record will exclude */
        /* all known tests to run any tests not seen during the previous run. */

        // bind the loop values to locals so that each closure captures its own copy
        def split = splits[i]
        def index = i

        branches["split${i}"] = {
            // allocate another agent
            node(nodeLabel) {
                // get the workspace contents back from the master
                unstash 'pom'; unstash 'sql'; unstash 'target'; unstash 'repo'
                populateDatabase()

                List mvnVerifyOpts = []

                /* Write includesFile or excludesFile for tests. Split record provided by splitTests. */
                /* Tell Maven to read the appropriate file. */
                if (split.includes) {
                    def path = splitFilePath('includes', index)
                    // lists include .java and .class -> number of tests * 2
                    echo "Writing includesFile for ${split.list.size()/2} tests"
                    writeFile file: path, text: split.list.join("\n")
                    mvnVerifyOpts += "-Dfailsafe.includesFile=${path}"
                } else {
                    def path = splitFilePath('excludes', index)
                    echo "Writing excludesFile for ${split.list.size()/2} tests"
                    writeFile file: path, text: split.list.join("\n")
                    mvnVerifyOpts += "-Dfailsafe.excludesFile=${path}"
                }

                // run only this split's share of the end-to-end tests
                // (the exact Maven arguments are omitted in this simplified listing; this call is an assumption)
                mvn "verify --projects esc-test ${mvnVerifyOpts.join(' ')}"
            }
        }
    }

    // submit the 'branches' map to the parallel step to actually execute it
    parallel branches
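As a rough plausibility check of the time budget, the numbers quoted in this article can be put together as follows. This is only back-of-the-envelope arithmetic in plain Groovy: the variable names are chosen for this example, and the figures are the approximate runtimes mentioned above, not new measurements.

```groovy
// Approximate figures quoted in this article, in minutes.
int goal = 30
int compileStage = 5          // 'Compile' stage
int unitStage = 5             // 'Unit / Component' stage
int systemTestsTotal = 105    // sequential runtime of all end-to-end tests

// Time left for the system test stage.
int budget = goal - compileStage - unitStage

// Lower bound on the number of agents, assuming perfectly even splits and no setup cost.
int minAgents = (systemTestsTotal + budget - 1).intdiv(budget)

// Pure test time per agent with the 10 splits configured above.
def perAgent = systemTestsTotal / 10

println "budget: ${budget} min, at least ${minAgents} agents, ~${perAgent} min of tests per agent with 10 splits"
```

The gap between the pure test time per agent and the 20-minute budget leaves room for per-agent setup such as unstashing and populating the database, and for splits that are not perfectly even.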

AWS Spot Instances as Build Agents

As mentioned above, our build agents run on AWS EC2 and are launched on demand. These build agents are not required to be highly available, so we can safely use the much cheaper spot instances, which reduce the costs to about 1/8th of the regular "on-demand" price. Regarding the usage of the Amazon EC2 plugin, we find the following facts noteworthy:

- Building AMIs using HashiCorp's Packer is a breeze. We just applied our existing Ansible playbooks.

- The plugin handles spot instances just as well as on-demand instances. Tick the magic Use Spot Instance check box and enter the maximum price you're willing to pay. The startup scripts described in the plugin documentation (Configure AMI for Spot Support section) are not required in the AMIs, as Jenkins just logs in via SSH.

- Ask AWS support to increase the limits for spot requests. The plugin creates a new spot request for every instance. So we had to raise the limit to 20, as we were able to start only one instance in the beginning.

- Configure the plugin to stop the instances 5 minutes before the end of a usage hour if they are idle (Idle termination time: -5). This nice feature is only mentioned in the help text and is, in our opinion, a far better choice than the default value of 30 minutes of idle time.

- Keep an eye on disconnected build agents shown in Jenkins. It happened to us a few times that Jenkins somehow lost connection and the spot request / instance was never terminated. We plan to monitor this in the future (or implement a fix for JENKINS-43774).
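To illustrate why a negative idle termination time is attractive, the decision can be modelled roughly as below. This is a sketch of the behaviour as described in the list above, not the plugin's code: the function shouldTerminate and its parameters are hypothetical, and treating 0 as "never terminate" is an assumption.

```groovy
// Decide whether an agent should be terminated.
// idleTerminationMinutes > 0: terminate once the agent has been idle for that many minutes.
// idleTerminationMinutes < 0: terminate an idle agent only in the last N minutes of its current usage hour.
boolean shouldTerminate(int uptimeMinutes, int idleMinutes, int idleTerminationMinutes) {
    if (idleMinutes <= 0) {
        return false    // busy agents are never terminated
    }
    if (idleTerminationMinutes > 0) {
        return idleMinutes >= idleTerminationMinutes
    }
    if (idleTerminationMinutes < 0) {
        int minutesLeftInHour = 60 - (uptimeMinutes % 60)
        return minutesLeftInHour <= -idleTerminationMinutes
    }
    return false        // 0: assumed here to mean "never terminate automatically"
}

// Idle agent 20 minutes into its hour: kept with -5, since the hour is paid for anyway.
println shouldTerminate(20, 15, -5)
// The same agent 56 minutes into its hour: terminated before the next hour starts.
println shouldTerminate(56, 3, -5)
```

With a setting of -5, an idle agent stays available for further builds during the remainder of the usage hour it has already been billed for, and is only shut down shortly before a new hour would begin.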

Bonus: Fast Builds for Feature Branches

The Parallel Test Executor plugin requires information about previous test execution times in order to split up the tests among the build agents. Therefore, it scans through test results of earlier builds of the same branch. For our pull request driven workflow, this again leads to the unacceptable two-hour feedback cycle for the first build of every new feature branch, as no previous test results can be found. To avoid this, we contributed a (yet to be merged) pull request to the plugin. The countermeasure was pretty simple: fall back to the master branch, if there's no successful build on the current branch.
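The fallback idea can be sketched as follows. This is a simplified model in plain Groovy rather than the code of the pull request: the function findReferenceBuild, the map-based build records and the branch names are hypothetical.

```groovy
// Pick the build whose test durations are used for splitting.
// 'buildsByBranch' maps a branch name to its builds, newest first (hypothetical data model).
def findReferenceBuild(Map buildsByBranch, String branch, String fallbackBranch = 'master') {
    def lastSuccessful = { String b -> (buildsByBranch[b] ?: []).find { it.result == 'SUCCESS' } }
    // prefer the current branch, otherwise fall back to the other branch
    return lastSuccessful(branch) ?: lastSuccessful(fallbackBranch)
}

def builds = [
    'master'         : [[number: 42, result: 'FAILURE'], [number: 41, result: 'SUCCESS']],
    'feature/new-api': []   // first build of a new feature branch: no history yet
]

def reference = findReferenceBuild(builds, 'feature/new-api')
println "splitting based on build #${reference.number}"
```

In this example the feature branch has no history, so the split is based on the most recent successful build of master. If neither branch has a successful build, the function returns null and no timing information is available.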

Summary

By applying the described changes, we shortened our feedback cycles by a factor of more than four. The combination of build agents launched on demand, e.g. using the EC2 plugin, and parallel test execution increased our velocity, as we can test and deliver user stories faster. By using AWS spot instances, the monetary costs are negligible, even when using 10 instances in parallel: the cost of the coffee consumed by our team by far exceeds the item for spot instances on our AWS bill.

In case you find this interesting and would like to drink coffee (or tea) with us: we're hiring in our developers' HQ located in beautiful Würzburg.

The content team of emnify specializes in all things IoT. Feel free to reach out to us if you have any questions.